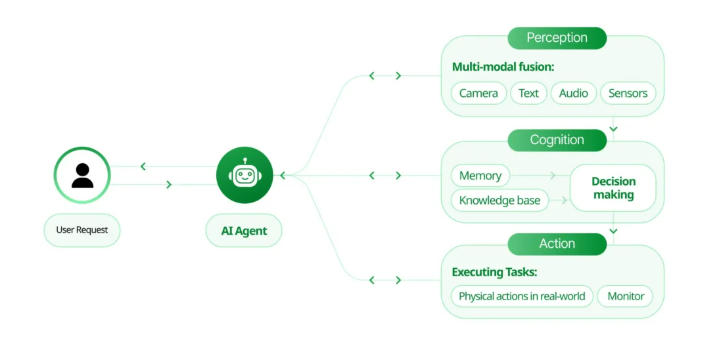

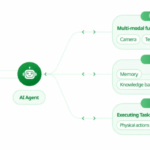

Agentic AI (autonomous systems that act as a new type of identity in our environments) is reshaping enterprise operations across a wide range of functions and sensitive corporate data, including sales, finance, HR, IT, and cybersecurity. By rapidly automating processes, AI agents are driving innovation and increasing productivity. Despite these clear advantages, however, the widespread adoption of agentic AI introduces a new attack vector and identity complexities that traditional Identity and Access Management (IAM) and Identity Governance and Administration (IGA) tools were never designed to address. According to Deloitte’s 2025 Technology Predictions report, 50% of businesses that use generative AI will deploy agentic solutions by 2027 because of their ability to automate complex departmental workflows. That pace is clearly outstripping IT and security readiness.

Introducing agentic AI brings a number of risks. These brand-new autonomous identities operate with privileged access, make decisions, and carry out actions without human supervision. If left unchecked or misconfigured, AI agents can create unmanaged identities that fall outside IAM frameworks, perform unintended actions, or access unauthorized data. AI agents can also fall victim to cyberattacks themselves and be used for data exfiltration or ransomware deployment in our networks. Such attacks range from exploiting simple weaknesses, like unchanged credentials or weak passwords, to sophisticated techniques like prompt manipulation or prompt injection.

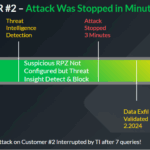

The lack of control over how AI tools are accessed and used is another emerging and alarming risk. Employees are increasingly using personal email addresses and identities to bypass corporate security and IAM policies when accessing AI systems. Agents operated this way let employees circumvent IT controls, because the agents act essentially as invisible browsers on endpoints. Traditional security tools, IAM, and even Zero Trust practices were not designed to monitor non-corporate logins or shadow access, so these rogue activities can easily go unnoticed. The result is a significant risk of data exfiltration, in which sensitive corporate data is accessed or transferred outside approved channels, along with new attack paths, additional unknown or unmanaged identities, and potential compliance violations.

Organizations that embrace AI for its transformative potential, whether within corporate security policies or outside them, now face unprecedented security challenges. What can we do about them? With a problem this complex, it helps to start with the basics: how can agentic AI activity be made fully visible and observable?

Identity Observability’s Contribution to AI Governance and Security

Identity observability is emerging as a crucial component for protecting environments populated by agentic AI. It starts with the first difficulty: how can an organization even identify and account for all of its AI agents, both approved and unapproved? With AuthMind’s Comprehensive Discovery, businesses can find any and all identities, managed or unmanaged. Our platform uses contextual monitoring of all identity-related activities and access flows to consolidate multiple accounts, including shadow, unknown, and personal identities, into a single identity view across any cloud or platform, giving security teams a clear, actionable, and prioritized view.
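To make the idea of consolidating several accounts into one identity view more concrete, here is a toy sketch. It is not AuthMind’s method, which this post does not describe. It assumes each observed account carries hypothetical identifying signals (strings such as `device:laptop-42` or `employee:E1001`) and links any accounts that share a signal using a union-find structure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Account:
    """An observed account plus hypothetical identifying signals."""
    account_id: str
    signals: frozenset  # e.g. frozenset({"device:laptop-42", "employee:E1001"})


def consolidate(accounts):
    """Group accounts sharing any signal into one identity, using union-find."""
    parent = {a.account_id: a.account_id for a in accounts}

    def find(x):
        # Follow parent links to the root, halving the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    # Link each account to the first account seen with the same signal.
    first_owner = {}
    for acct in accounts:
        for signal in acct.signals:
            if signal in first_owner:
                union(first_owner[signal], acct.account_id)
            else:
                first_owner[signal] = acct.account_id

    # Collect accounts by root identity; sort for stable output.
    groups = {}
    for acct in accounts:
        groups.setdefault(find(acct.account_id), []).append(acct.account_id)
    return sorted(sorted(members) for members in groups.values())


if __name__ == "__main__":
    observed = [
        Account("alice@corp.example", frozenset({"employee:E1001", "device:laptop-42"})),
        Account("alice.personal@mail.example", frozenset({"device:laptop-42"})),
        Account("svc-agent-7", frozenset({"employee:E1001"})),  # agent created by E1001
        Account("bob@corp.example", frozenset({"employee:E2002"})),
    ]
    for identity in consolidate(observed):
        print(identity)
```

Here the corporate account, the personal webmail account seen on the same laptop, and the agent created by the same employee collapse into one identity, while Bob stays separate. Linking on any single shared signal is deliberately naive: a shared kiosk or jump host would merge unrelated people, so a production system would likely need to weight and corroborate signals before merging.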

Organizations can learn the “who, what, when, where, and why” of every action, including AI agent actions, thanks to AuthMind’s identity observability. It provides the necessary context along with a historical record of the actual steps and access flows taken within the infrastructure, so the question of how AI agents access various systems and carry out their operations is answered continuously. This capability is essential for identifying and auditing the actions of AI agents, spotting unusual behavior, mitigating risks, and correcting errors. For example, if an AI agent suddenly starts accessing sensitive data or performing unauthorized transactions, identity observability can help flag these activities for investigation and map them back to the user who accessed or created that agent.
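The flag-and-attribute step in that example can be illustrated with a deliberately simple sketch. It assumes a hypothetical event stream of `(agent, resource)` pairs and a hypothetical registry mapping each agent to the user who created it. The “baseline” is just the set of resources each agent touched during a learning period, which is far cruder than real behavioral profiling and is not how AuthMind’s platform is claimed to work.

```python
class AgentBaseline:
    """Toy baseline: the resources each agent accessed during a learning period."""

    def __init__(self, agent_owner):
        self.agent_owner = agent_owner  # hypothetical registry: agent id -> creating user
        self.seen = {}                  # agent id -> set of resources seen while learning

    def learn(self, events):
        """Record (agent, resource) pairs observed during normal operation."""
        for agent, resource in events:
            self.seen.setdefault(agent, set()).add(resource)

    def check(self, events, sensitive):
        """Flag sensitive accesses outside the agent's baseline, with the owner attached."""
        alerts = []
        for agent, resource in events:
            if resource in sensitive and resource not in self.seen.get(agent, set()):
                alerts.append({
                    "agent": agent,
                    "resource": resource,
                    # Agents missing from the registry may be unmanaged identities.
                    "owner": self.agent_owner.get(agent, "unknown"),
                })
        return alerts


if __name__ == "__main__":
    baseline = AgentBaseline({"invoice-bot": "alice@corp.example"})
    baseline.learn([("invoice-bot", "erp/invoices"), ("invoice-bot", "mail/outbound")])
    live = [
        ("invoice-bot", "erp/invoices"),   # normal, not flagged
        ("invoice-bot", "hr/salaries"),    # new sensitive access
        ("rogue-agent", "hr/salaries"),    # unregistered agent
    ]
    for alert in baseline.check(live, sensitive={"hr/salaries"}):
        print(alert)
```

Each alert carries the agent’s registered owner, so an investigation can start from the responsible user; an agent absent from the registry is reported with owner `unknown`, which is one way such a sketch could surface an unmanaged agent identity.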

AuthMind’s Identity Observability Platform helps businesses secure agentic AI in several ways. Using a multi-layer detection strategy, it can:

- Detect the existence of an agentic AI framework.
- Map that framework to particular identities (human, non-human identity or NHI, and agentic).
- Detect unusual or unanticipated AI agent activity over time, and determine whether usage is permitted or violates security controls.

Identify Agentic AI Users and Overcome Identity Mapping Obstacles

- Detect employees accessing approved or unapproved AI tools, and whether that access falls under corporate security policies and controls.
- Find out when employees use personal credentials to access AI tools.
- Map the same identity across every type of account.

Monitoring Agentic AI Utilization

- Be aware of authorized agents carrying out unauthorized activities, AI agents connected to unapproved systems, and users automating their own personal tasks.
- Map the exact systems and other agents that AI agents interacted with, and the security of those accesses.

Agentic AI Detailed Behavioral Profiling

- Profile activity patterns with comprehensive network visibility, so you can recognize when agents deviate from the norm.
- Distinguish between compromised users and compromised agents.

In a nutshell, identity observability offers the crucial insights needed to ensure that agentic AI operates within clearly defined boundaries and abides by security policies. It provides the context needed to determine whether an agent has been compromised or is simply carrying out its assigned tasks. In this way, identity observability is not merely a security tool; it is an essential enabler of responsible and secure agentic AI adoption.